This morning, a deeply disturbing article popped up in an aggregator service that I subscribe to, called Neuroscience News.

It referenced an article that originally appeared in The Conversation, a network of not-for-profit media outlets, launched in Australia in 2011, which bills itself as “a unique collaboration between academics and journalists that is the world’s leading publisher of research-based news and analysis”.

And wouldn’t ya know it, Bill Gates thought it was so fabulous that he threw some money at the founders to replicate their project in the US and South Africa.

The article, titled ‘Interactive cinema: how films could alter plotlines in real time by responding to viewers’ emotions’, is about a new interactive film called Before We Disappear.

Here’s the plot synopsis (typos in the original):

“The year 2042, the UK is now a tropical region. Floods, fires, storms, civil unrest are common.

In the late 20s A series of high-level corporate individuals, billionaires, lobbyists, and politicians were assassinated by autonomous weapons built by climate activists. This system assessed the unit of human suffering and calculated the minimum amount of people that were to be targeted to save the most lives. It was the trolly problem but on a global scale.

In the resulting power vacuum the perpetrators became the heroes in this new narrative. A move to a circular economy, of reuse and adaptation to the changing environment.Before We Disappear follows the two people behind the purges of the 2020s, and their personal fallout.”

The article in The Conversation was written by the film’s creator, Richard Ramchurn, whose bio helpfully provides his pronouns, just in case the beard wasn’t a giveaway.

Here’s what Mr Ramchurn (he/him) had to say about his film:

“Most films offer exactly the same viewing experience. You sit down, the film starts, the plot unfolds and you follow what’s happening on screen until the story concludes. It’s a linear experience. My new film, Before We Disappear – about a pair of climate activists who seek revenge on corporate perpetrators of global warming – seeks to alter that viewing experience.

What makes my film different is that it adapts the story to fit the viewer’s emotional response. Through the use of a computer camera and software, the film effectively watches the audience as they view footage of climate disasters. Viewers are implicitly asked to choose a side.”

Right, so let me get this straight. Viewers are shown footage (is it newsreel footage or CG?) of various catastrophic incidents that are attributed to climate change (will proof of the connection between climate change and these incidents be provided?), and their emotional reactions are studied to see whether they’re OK with the individuals whose corporate activities are blamed for these incidents (will evidence of their guilt be presented?) being brutally murdered by ‘climate activists’.

“I chose to use this technology to make a film about the climate crisis to get people to really think about what they are willing to sacrifice for a survivable future…”

You mean, whether we’re prepared to carry out murder, or condone murder carried out by other people, in the name of ‘saving the planet’ (whatever the hell that means) from ‘the climate crisis’ (whatever the hell that means)?

“Before We Disappear uses an ordinary computer camera to read emotional cues and instruct the real-time edit of the film. To make this work, we needed a good understanding of how people react to films.

We ran several studies exploring the emotions filmmakers intend to evoke and how viewers visually present emotion when watching. By using computer vision and machine learning techniques from our partner BlueSkeye AI, we analysed viewers’ facial emotions and reactions to film clips and developed several algorithms to leverage that data to control a narrative…

While we observed that audiences tend not to extensively emote when watching a film, BlueSkeye’s face and emotion analysis tools are sensitive enough to pick up enough small variations and emotional cues to adapt the film to viewer reactions.

The analysis software measures facial muscle movement along with the strength of emotional arousal – essentially how emotional a viewer feels in a particular moment. The software also evaluates the positivity or negativity of the emotion – something we call ‘valence’.”

Oooh, I just love the idea of having a camera hooked up to an AI studying my reactions to the murderous activities of self-righteous ‘climate warriors’. This doesn’t sound anything like Two Minutes Hate in Nineteen Eighty-Four. No, not a bit.

“We are experimenting with various algorithms where this arousal and valence data contributes to real-time edit decisions, which causes the story to reconfigure itself. The first scene acts as a baseline, which the next scene is measured against. Depending on the response, the narrative will become one of around 500 possible edits. In Before We Disappear, I use a non-linear narrative which offers the audience different endings and emotional journeys.


I see interactive technology as a way of expanding the filmmaker’s toolkit, to further tell a story and allow the film to adapt to an individual viewer, challenging and distributing the power of the director.”

It’s always, always about power, with these people. They’re absolutely obsessed with it.

“However, emotional responses could be misused or have unforeseen consequences.”

You mean, like inspiring ‘copycat’ killings? It’s not like that’s ever happened before.

“It is not hard to imagine an online system showing only content eliciting positive emotions from the user. This could be used to create an echo chamber – where people only see content that matches the preferences they already have.”

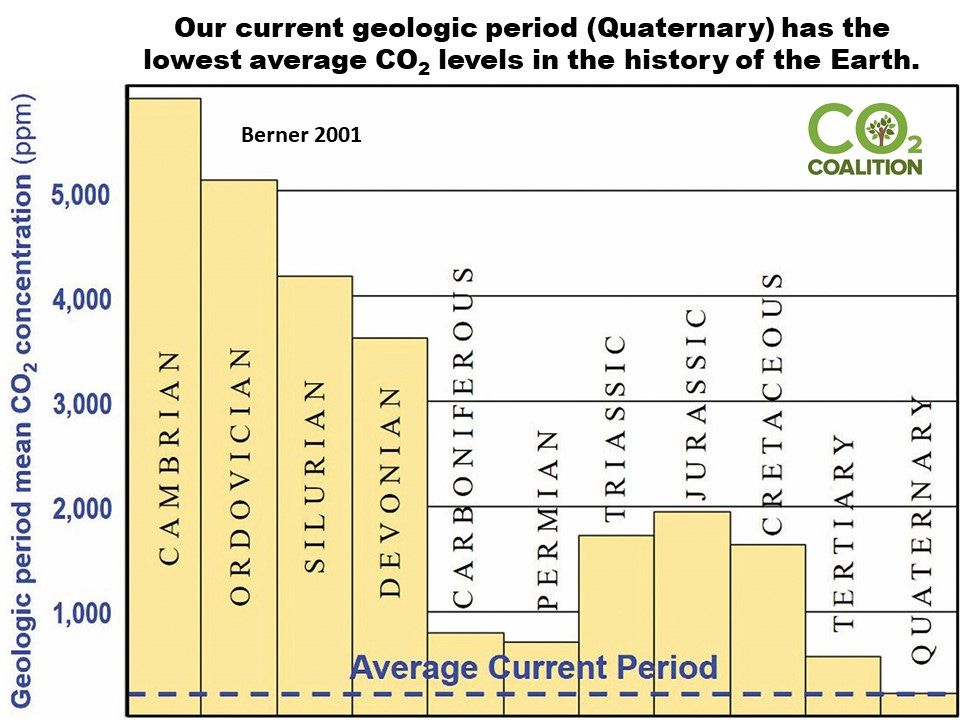

Like a ‘climate crisis’ echo chamber, in which no debate is ever allowed on whether, how and why Earth’s climate is changing, what the consequences (good and bad) of these changes might be, whether the policies proposed to address ‘climate change’ have any possibility of working and what their cost/benefit ratio might be, and what strategies we might use to adapt to these changes, as humans have done in the past (quite successfully, apparently, despite their primitive technologies).

“Or it could be used for propaganda.”

Ya think?

“Our research aims to generate conversation about how users’ emotion data can be used responsibly with informed consent, while allowing users to control their own personal information.”

It’s totally OK if Big Brother spies on you with creepy cameras and AI, as long as you consent.

Yeah, I think I’ll pass. How about you?

All killings are equal, but some killings are more equal than others.


I agree, this is an attempt to normalise extreme violence against "environmental vandals".

Almost like an Asch conformity test.

Not surprised to see this sort of stuff in The Conversation (with Billy G, the philanthropath behind it financially).

I'll stick to the old "linear" films, thank you, keeping me and my emotions to myself. It all started with 'Lassie Come Home' which teared me up no end when I was a nipper. The opening five minutes of the Anne Frank film (with Ben Kingsley as Otto Frank) also did the trick, as did 'Gorillas in the Mist', whilst the German (anti-Nazi, anti-war) film, 'Stalingrad', made me melancholy for days afterwards. On the other hand, Laurel and Hardy still double me up with laughter.